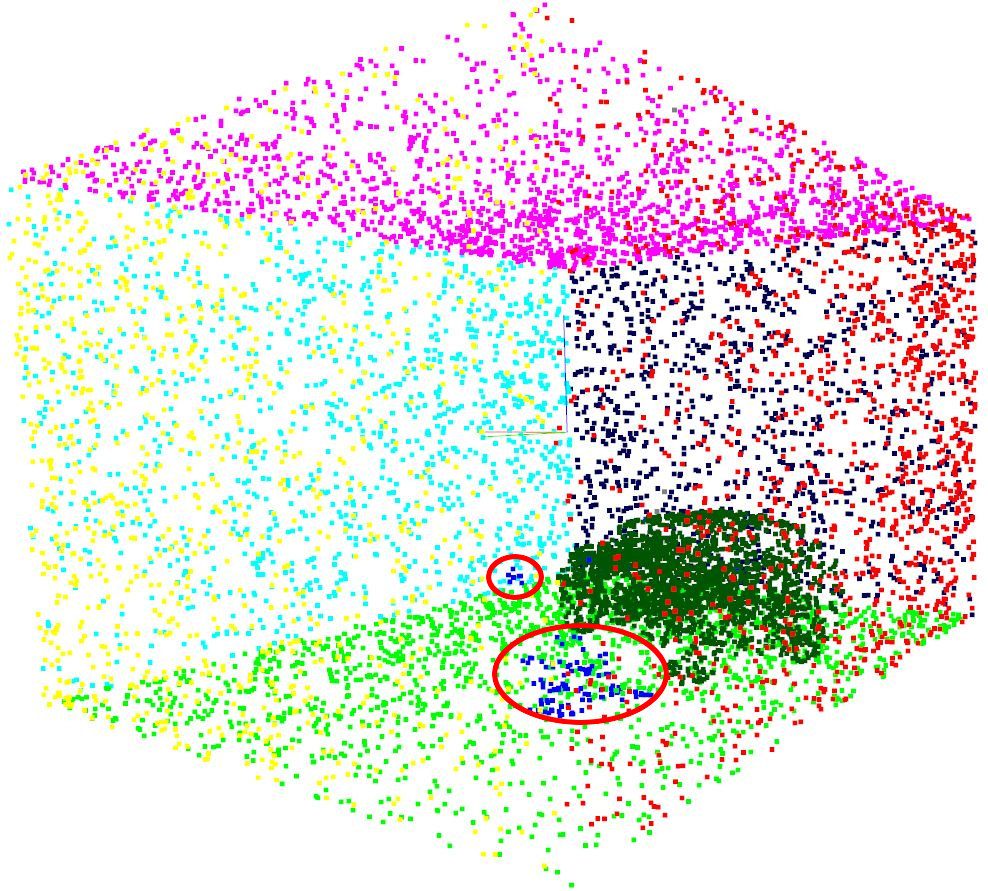

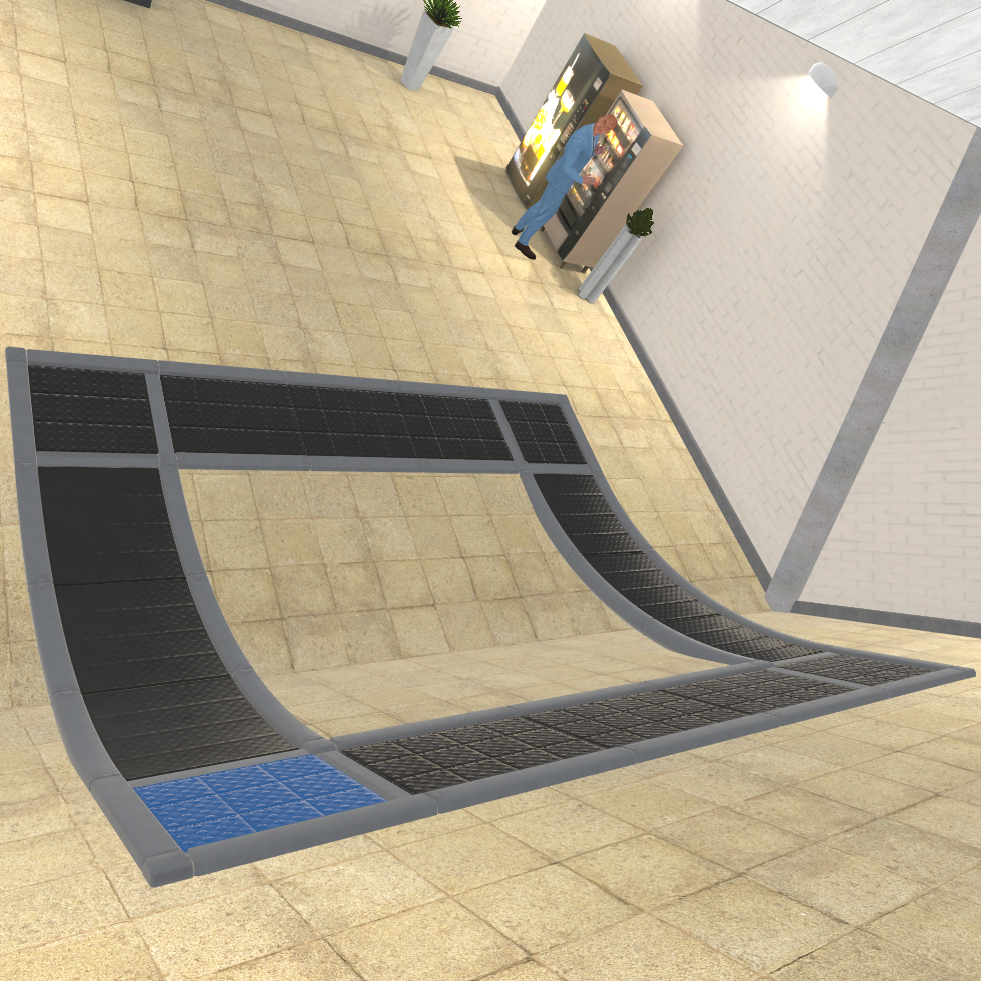

Following the implantation of an artificial knee joint, patients have to perform rehabilitation exercises at home. Their motivation to exercise can be low, and if the exercises are not performed, rehabilitation may take longer or a follow-up operation may be required. Moreover, performing the exercises incorrectly over a long period can lead to injuries. We therefore present two Programming by Demonstration (PbD) algorithms, a Nearest-Neighbour (NN) model and the Alpha Algorithm (AlpAl), that measure the quality of exercise executions and can be used to give feedback in exergames.
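To illustrate the general idea behind a nearest-neighbour quality rating in a PbD setting, here is a minimal 1-NN sketch. It is not the authors' NN model. It assumes, hypothetically, that each execution is resampled to a fixed-length sequence of knee angles (in degrees), that a therapist has demonstrated and labeled reference executions, and that Euclidean distance is an adequate similarity measure. The names `Demonstration`, `euclidean`, and `rate_execution` are illustrative only. The Alpha Algorithm is not sketched here.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical representation: a demonstrated execution (knee angle in
# degrees, resampled to a fixed number of time steps) paired with the
# quality label assigned when it was demonstrated.
Demonstration = Tuple[Sequence[float], str]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length angle sequences."""
    if len(a) != len(b):
        raise ValueError("executions must be resampled to the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rate_execution(execution: Sequence[float],
                   demonstrations: List[Demonstration]) -> str:
    """Return the label of the closest demonstrated execution (1-NN)."""
    _, label = min(demonstrations,
                   key=lambda demo: euclidean(execution, demo[0]))
    return label


# Made-up example demonstrations, for illustration only.
demos: List[Demonstration] = [
    ([0.0, 45.0, 90.0, 45.0, 0.0], "correct"),
    ([0.0, 20.0, 40.0, 20.0, 0.0], "too shallow"),
]

print(rate_execution([0.0, 40.0, 85.0, 40.0, 0.0], demos))  # correct
```

Because the rating is simply the label of the most similar demonstration, new quality categories can be added by recording further labeled demonstrations rather than by writing explicit rules, which is the appeal of a PbD approach for exergame feedback.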