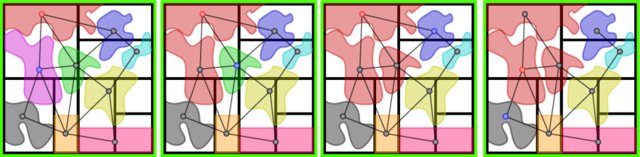

We propose a method for segmenting a real-world point cloud, framed as a perceptual grouping task (PGT) that is solved by a deep reinforcement learning (DRL) agent. For the PGT, the point cloud is first divided into groups of points, called superpoints. These superpoints are then grouped into objects by a deep neural network policy that is optimised by a DRL algorithm. At each step of the PGT, a main superpoint and a neighbouring superpoint are selected by one of two proposed strategies: the superpoint growing strategy or the smallest superpoint first strategy. The agent then decides whether the two selected superpoints should be grouped together, and it receives a reward whenever one can be determined during the PGT. We optimised the policy with proximal policy optimisation (PPO) [SWD+17] and with dueling double deep Q-learning [HMV+18], each combined with both proposed superpoint selection strategies, on a scene of the ScanNet data set [DCS+17]. For this purpose, the scene is converted from a labelled mesh into a labelled point cloud. Our intermediate results still leave room for improvement, but they show that the agent is able to improve its performance during training. Additionally, we suggest using the PPO algorithm with one of the proposed selection strategies, because this makes training more stable.
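The following minimal Python sketch makes the PGT interaction loop concrete. It implements a toy environment that uses the smallest superpoint first strategy. It is not the authors' implementation. The class `ToyGroupingEnv`, the rule of picking the smallest adjacent group as the neighbour, the majority-label ground truth and the ±1 reward are all illustrative assumptions. Note one further simplification: the described method only gives a reward when one is determinable, whereas this sketch always computes one. A hard-coded oracle stands in for the neural network policy that PPO or dueling double deep Q-learning would optimise.

```python
from collections import Counter


class ToyGroupingEnv:
    """Hypothetical toy PGT with smallest-superpoint-first selection (not the authors' code)."""

    def __init__(self, superpoints, adjacency, point_labels):
        # superpoints: list of point-index lists; a group's id is its list position
        self.groups = {i: list(sp) for i, sp in enumerate(superpoints)}
        # adjacency: pairs (i, j) of neighbouring superpoints
        self.adjacency = {frozenset(pair) for pair in adjacency}
        self.labels = point_labels  # assumed ground-truth object label per point
        self.rejected = set()       # pairs the agent decided not to merge

    def majority_label(self, gid):
        # Assumption: a group's ground-truth object is the majority label of its points.
        counts = Counter(self.labels[p] for p in self.groups[gid])
        return counts.most_common(1)[0][0]

    def size_key(self, gid):
        # Order groups by size, breaking ties by id.
        return (len(self.groups[gid]), gid)

    def select_pair(self):
        """Return (main, neighbour), or None when no undecided adjacent pair is left."""
        for main in sorted(self.groups, key=self.size_key):
            neighbours = [g for g in self.groups
                          if g != main
                          and frozenset((main, g)) in self.adjacency
                          and frozenset((main, g)) not in self.rejected]
            if neighbours:
                # Assumption: the neighbour is the smallest adjacent group.
                return main, min(neighbours, key=self.size_key)
        return None

    def step(self, main, neighbour, merge):
        """Apply the agent's binary decision and return a (simplified) reward."""
        same_object = self.majority_label(main) == self.majority_label(neighbour)
        reward = 1.0 if merge == same_object else -1.0
        if merge:
            # The main group absorbs the neighbour's points.
            self.groups[main].extend(self.groups.pop(neighbour))
            # Re-attach the absorbed neighbour's edges to the main group.
            rewired = set()
            for pair in self.adjacency:
                ends = {main if g == neighbour else g for g in pair}
                if len(ends) == 2:
                    rewired.add(frozenset(ends))
            self.adjacency = rewired
            # The merged group is new, so earlier rejections involving it lapse.
            self.rejected = {p for p in self.rejected
                             if main not in p and neighbour not in p}
        else:
            self.rejected.add(frozenset((main, neighbour)))
        return reward


# Usage: four superpoints along a chain, covering a chair and a table.
env = ToyGroupingEnv(
    superpoints=[[0, 1], [2], [3, 4, 5], [6]],
    adjacency=[(0, 1), (1, 2), (2, 3)],
    point_labels=["chair"] * 3 + ["table"] * 4,
)
total = 0.0
while (pair := env.select_pair()) is not None:
    main, neighbour = pair
    # Oracle stand-in for the learned policy: merge iff the majority labels agree.
    decision = env.majority_label(main) == env.majority_label(neighbour)
    total += env.step(main, neighbour, decision)
print(sorted(sorted(g) for g in env.groups.values()), total)
# prints [[0, 1, 2], [3, 4, 5, 6]] 3.0
```

Each merge reduces the number of groups, and each rejection permanently excludes an adjacent pair until one of its groups changes. As a result, an episode always terminates. In a DRL setting, the oracle decision would be replaced by the policy's action computed from features of the two selected superpoints.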