Technology is an integral part of everyday life. The “Personal Internet of Things” has become reality and consists of connected devices such as smartwatches, smartphones and smart glasses. These communication interfaces will become increasingly advanced over time.

The changes brought about by progressive digitization through connected smart devices are also an interesting and promising field of research for production economics. Interconnected machines, robots, tools, and humans create cyber-physical systems (CPS) and have the potential to significantly accelerate product lifecycle management. Humans will operate within those systems as flexible problem solvers and intelligent decision makers. However, an ever-growing number of connected devices and advanced sensors within the “Industrial Internet of Things” creates enormous amounts of data, which must be understood and managed by the decision makers. Therefore, communication with the CPS needs to be easy, intuitive, and efficient.

The goal of iKPT 4.0 is to develop and investigate new ways of interacting with complex data from the “Industrial Internet of Things” in order to shorten production times, react flexibly to problems and changes in the production chain, and dynamically create different variants of a product (custom-made manufacturing). In this context, novel technologies are used to create new multi-modal interaction paradigms that allow for much faster and easier perception of complex data and much more intuitive interaction for managing those data.

The project iKPT 4.0 is divided into three major parts, which cover the following topics:

M1) User-centered adaptation of the working space in the CPS: the worker’s state is observed via physiological and body-tracking technologies, which can also be employed to measure the environment. Based on the retrieved information, digital content and visualizations are processed appropriately for the worker. The result will be an interactive and adaptable system for the users.

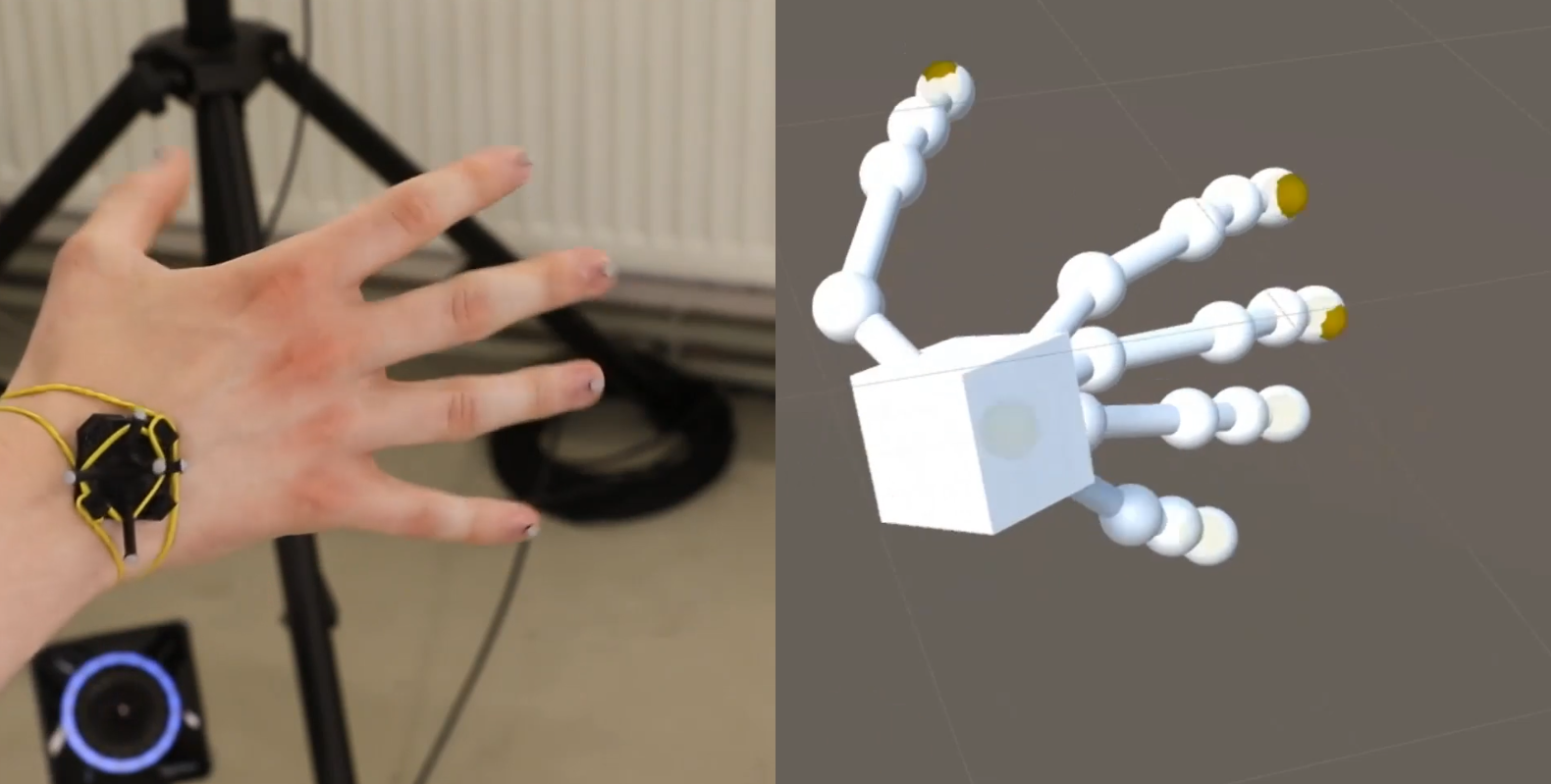

M2) Multimodal interaction for cooperative human-robot interaction: in the field of series production (e.g. the automotive industry), the actions of humans and robots need to be synchronized and coordinated in order to establish the human as a cooperation partner of the robot. This cooperation should also be possible in unpredictable situations.

M3) Design engineering through mixed reality technologies: this part enhances the design process of technical products, services and systems with innovative digital augmented- and virtual-reality solutions. A user-centered design process will be used to develop pre-visualization tools (sketches, modeling, illustration, simulation) that simulate human-machine cooperation in a collaborative manner.

All parts will be processed in similar steps:

- empathize: come into contact with a specific working environment/field,

- define/analyze: analyze the findings of the empathize phase and define problems,

- ideate: create ideas to solve the problems with suitable methods (e.g. co-design workshops),

- prototype: build a practical solution, and

- test: evaluate it with users quantitatively and qualitatively.

These steps must be tailored to each project part. Moreover, adaptable solutions will be developed through iterative processes.

To sum up, the project focuses on facilitating human work in industrial production and on the use of body-near interaction in Industry 4.0. Existing technologies will be enhanced and used in novel combinations with appropriate methods to develop innovative solutions, which will then be tested in industry.