DISTEL – data-driven, intelligent storytelling with robots

Innovative AI technologies use data to tell multimedia stories that can be experienced empathically through immersive VR/AR technologies and interactive robotics.

User behavior is changing because of the democratization of intelligent digital technologies, ubiquitous access to them, and the emergence of new target groups such as Generation Z. This change affects the intake, processing, usage, and transfer of knowledge, information, and data. Developments such as automated journalism, ubiquitous information visualization/Big Data, and immersive storytelling (e.g. news or VR entertainment) have found their way into every domain of knowledge transfer. One especially important aspect is the development of intelligent data-driven approaches that use techniques from data science (DS) and artificial intelligence (AI) to make complex data analyses intelligible.

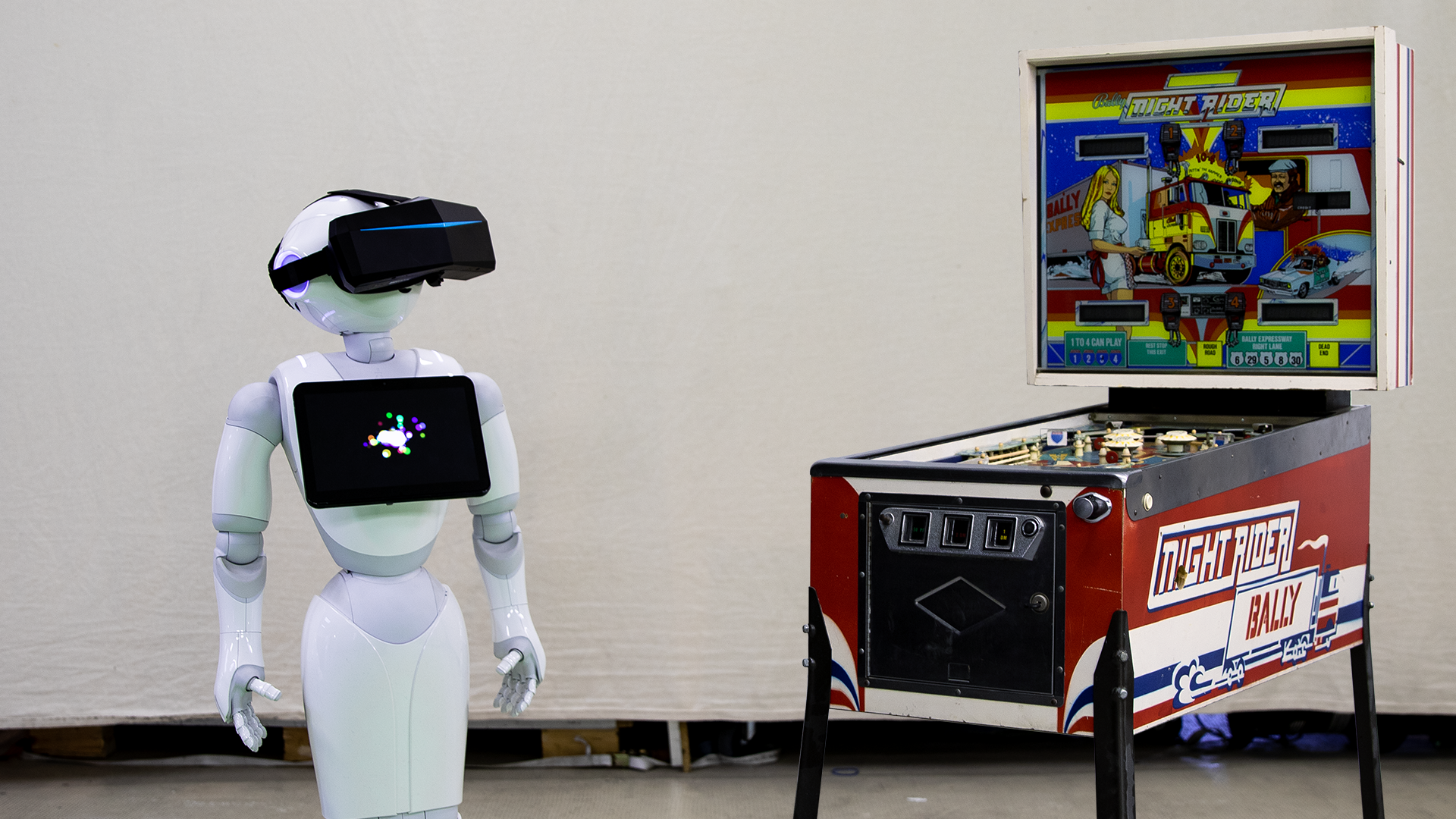

The goal of the DISTEL project consortium is to implement a flexibly usable demonstrator for data-driven, nonlinear storytelling by means of immersive and intelligent technologies. We are developing two intelligent communication partners (the physical robot Pepper and a virtual 3D avatar) that realize a range of complex communication scenarios using the latest AI technologies. DISTEL is intended to show that combining immersive and intelligent technologies can produce new forms of storytelling whose distinctive features arise from synergies between three domains: data science/artificial intelligence, digital storytelling, and human-technology interaction/mixed reality.

Within the field of AI/data science, DISTEL applies modern machine learning/deep learning methods as well as intelligent dialog modeling to extract relevant information from complex and heterogeneous databases through efficient data-science analyses. Based on a library of narrative story patterns and suitable multimodal interaction techniques, DISTEL supports the production of immersive storylines that create a demonstrably positive user experience. The prototype of a smart conversational agent is being developed and validated in various scenarios based on the partners’ requirements. After the funding period ends, these partners will use the results to implement follow-up projects.

Partners: The interdisciplinary team includes scientific and industry partners who operate at the interface between artificial intelligence, data science, and digital media. The Hochschule Düsseldorf (HSD) is an experienced research partner in the fields of digital media, human-technology interaction, and mixed reality. LAVAlabs Moving Images is a project partner with extensive R&D experience; it is responsible in particular for ambitious moving-image productions and contributes extensive knowledge of immersive visualization. As AI experts for digital media content, storytelling, and any other required machine-learning activity, the start-ups Cognigy and Nyris from Düsseldorf bring their expertise and their technologies into the project. All partners aim to refine the project’s results by using them in follow-up projects.