In this project we introduce a novel interface that combines spatial and tangible interaction for creating audio-visual effects in a natural and engaging way. We demonstrate the setup with an artistic installation for creating ambient soundscapes, which are controlled by a Lattice Boltzmann-based particle simulation running through a deformable landscape.

Because there is no direct analogy between sculpting a sand surface and creating sound, we were free to design the mapping between the two. The concept for sound synthesis was developed with the following criteria in mind:

- An easy-to-understand system response to user input, allowing deliberate control of the produced sound.

- Correspondence between visual effects and audio.

- A new approach that differs from existing projects.

- Use both AR content and the sand surface to control the system.

- Create a system that is fun to interact with.

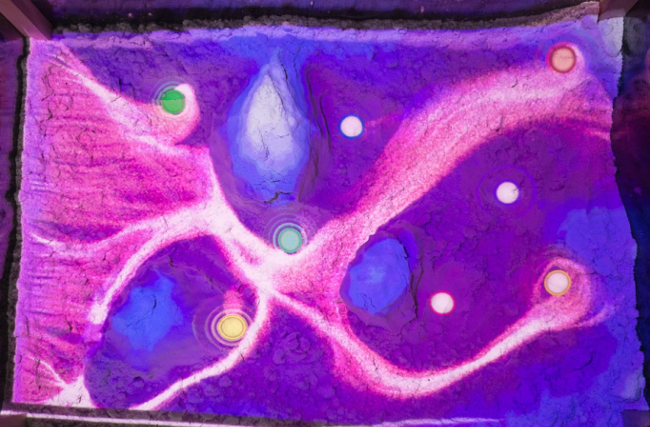

To meet these criteria we decided to use a fluid simulation that moves particles from left to right through the sand's topography in a natural and intuitive way. Up to eight virtual AR sound points are placed onto the surface by the user and are stimulated by these particles to emit sound. Each particle that hits an AR point is absorbed, and the rate of incoming particles at each point can be mapped to an arbitrary sound parameter. In our system we simply chose to control the volume of predefined audio samples, as this is an easy way to interact with music. For advanced requirements we added OSC support as a way to control other applications, especially those dedicated to creating music or sound.

To simulate real-world behavior we use a two-dimensional Lattice Boltzmann simulation (LBM) with a D2Q9 model running on the GPU to create water-like flows of particles. Hills and steep horizontal edges are drawn onto a texture that is used by the simulation as obstacles. LBM allows the degree of turbulence to be controlled with a single relaxation parameter, which creates flow effects that are both predictable and visually interesting. The direction and velocity of the fluid are stored for each pixel in a fluid map. Additionally, we create a gradient map of the topography that describes the direction of the steepest slope for each point, and we pull particles in this direction. Further, particles are attracted to sound points with a force similar to gravity. These forces are calculated, weighted, and added for each particle to form a combined force that determines the flow direction of that particle.
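The way the three influences are combined per particle can be illustrated with a small CPU sketch. It is not the installation's GPU code: the weights, the absorption radius, the inverse-square attraction, the `max_count` used for the volume mapping, and all function names are assumptions made for illustration. The combined force is applied directly as a displacement per step, which is a simplification.

```python
import math

# All weights, radii, and names below are illustrative assumptions,
# not values taken from the installation.
W_FLUID, W_SLOPE, W_ATTRACT = 1.0, 0.5, 0.2
ABSORB_RADIUS = 1.5


def downhill(height, x, y):
    """Finite-difference estimate of the steepest-descent direction."""
    h, w = len(height), len(height[0])
    x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
    y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
    gx = (height[y][x1] - height[y][x0]) / max(x1 - x0, 1)
    gy = (height[y1][x] - height[y0][x]) / max(y1 - y0, 1)
    return -gx, -gy  # the negative gradient points downhill


def attraction(px, py, points):
    """Sum of inverse-square pulls towards every sound point."""
    fx = fy = 0.0
    for sx, sy in points:
        dx, dy = sx - px, sy - py
        d2 = dx * dx + dy * dy + 1e-6  # avoid division by zero
        d = math.sqrt(d2)
        fx += dx / (d * d2)
        fy += dy / (d * d2)
    return fx, fy


def combined_force(px, py, fluid, height, points):
    """Weighted sum of fluid velocity, slope pull, and point attraction."""
    ix, iy = int(px), int(py)
    ux, uy = fluid[iy][ix]  # fluid map: one (ux, uy) velocity per pixel
    gx, gy = downhill(height, ix, iy)
    ax, ay = attraction(px, py, points)
    return (W_FLUID * ux + W_SLOPE * gx + W_ATTRACT * ax,
            W_FLUID * uy + W_SLOPE * gy + W_ATTRACT * ay)


def step(particles, fluid, height, points, dt=1.0):
    """Move particles; return survivors and per-point absorption counts."""
    counts = [0] * len(points)
    alive = []
    for px, py in particles:
        fx, fy = combined_force(px, py, fluid, height, points)
        nx, ny = px + dt * fx, py + dt * fy
        hit = next((i for i, (sx, sy) in enumerate(points)
                    if math.hypot(sx - nx, sy - ny) <= ABSORB_RADIUS), None)
        if hit is not None:
            counts[hit] += 1  # the particle is absorbed by this sound point
        elif 0 <= nx < len(height[0]) and 0 <= ny < len(height):
            alive.append((nx, ny))  # particles leaving the map are dropped
    return alive, counts


def volume(count, max_count=20):
    """Map an absorption count to a sample volume in [0, 1]."""
    return min(count / max_count, 1.0)
```

Calling `step` once per frame and feeding each entry of `counts` through `volume` gives one volume value per sound point. The same counts could just as well drive any other sound parameter.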

Interaction with the system is based on three different concepts. First, it is possible to interact with the kinetic sand in a very natural way, like a child playing in a sandbox. The user can sculpt the surface with both hands and watch the resulting changes in the particle flow. The user can construct walls or hills that deflect the particles onto AR points or away from them. Forming holes causes particles to accumulate, and digging ditches allows precise control of the particle flow.

Second, the user controls AR content and settings from the on-body menu, which is projected onto the left hand if its palm is facing upwards and its thumb is spread away. This pose is unlikely to occur during interaction but is easy to adopt deliberately when the user wants to use the menu. For interaction we use a graphical menu projected onto the user's hand and controlled by touch and swipe events. Considering today's wide spread of mobile devices like smartphones and tablets, control by touch and swipe is an easy-to-learn interaction technique for many potential users. Using this menu, the user can add new AR points onto the sand or remove existing points. Additionally, the user can select one of two presets of sound and visual presentation: a calm setting with nice and warm sound together with blue and violet colors, and a sinister setting with dark and unpleasant sound and a red and black color scheme.

Last, the user can perform gestural input. Depending on the operating mode, the user can move or remove existing points. To do so, the user selects AR points by pointing a finger at them, just like indicating specific objects in the real world. After a small delay, which avoids the Midas touch problem, the selected point is either removed or attached to the selecting finger to be placed somewhere else. Placing an AR point is handled in a similar way by pointing to the desired location. Further, the user can push particles with hand swipes in any direction or use a hand to temporarily block the particle flow.
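The delayed selection that guards against the Midas touch problem can be sketched as a dwell timer. This is a minimal illustration and not the installation's code: the class name, the `update` interface, and the default dwell time of 0.8 seconds are assumptions, since the text only speaks of a small delay.

```python
class DwellSelector:
    """Report a pointed-at target only after it has been held long enough."""

    def __init__(self, dwell_seconds=0.8):  # assumed value, not from the source
        self.dwell_seconds = dwell_seconds
        self.candidate = None  # target currently being pointed at
        self.since = None      # time at which pointing at it started
        self.fired = False     # True once the current candidate was reported

    def update(self, target, now):
        """Feed the current target id (or None) and a timestamp in seconds.

        Returns the target id once when the dwell time has elapsed,
        otherwise None.
        """
        if target != self.candidate:
            # Pointing moved to a different target: restart the timer.
            self.candidate, self.since, self.fired = target, now, False
            return None
        if target is None or self.fired:
            return None
        if now - self.since >= self.dwell_seconds:
            self.fired = True
            return target
        return None
```

Calling `update` once per tracking frame with the id of the AR point under the fingertip yields a selection only when the finger rests on the same point for the whole dwell time. Brief passes over a point during sculpting are ignored.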

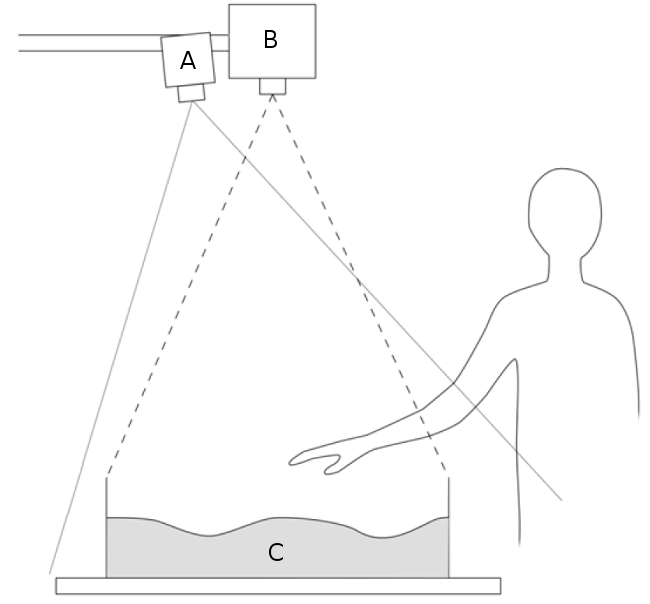

Our sandbox uses a setup similar to Piper, Ratti, and Ishii's Illuminating Clay [1]. We use a 0.6 m x 0.4 m plexiglass box that is filled with 0.10 m of kinetic sand (C). We chose kinetic sand as the haptic medium because of its interesting haptic properties and because it is easy to form into firm structures. We placed a Kinect 2 sensor (A) and a pico projector (B) 1.2 m above the sand surface, both facing downwards.

References

[1] B. Piper, C. Ratti, and H. Ishii. Illuminating Clay: a 3-D tangible interface for landscape analysis. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 355-362, 2002.

Contact

Bastian Dewitz, Roman Wiche, Christian Geiger, Frank Steinicke, Jochen Feitsch