In several years of development, a complex installation was created, with which a fully synthesized operatic vocal part can be controlled via body gestures and facial expressions. The project now includes additional options for lighting control and crowd participation.

The vocal synthesis mimics the sound of a tenor opera voice from the early 20th century. The vocals are fully synthesized using the Max/MSP(1) software to provide full control over volume, pitch, vibrato and vowels. Here, the volume, pitch and vibrato are controlled by the arms; the desired vowel is formed by mouth. For this purpose, both the body and the face of the user must be tracked.

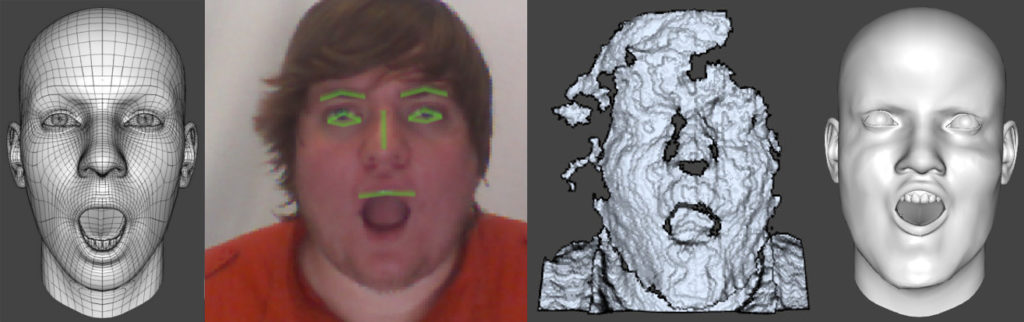

The body tracking takes place by means of Perception Neuron(2). Perception Neuron is a suit that is equipped with IMUs and, for this application, is attached to the upper body. By stretching the arms, the volume can be controlled – close to the body for quiet, stretched out for loud; the pitch is controlled by arm height and a supporting algorithm; and by rotating the hands, the vibrato of the voice can be determined. Face tracking takes place through Facegen(3). Facegen uses a depth camera, a PrimeSense(4) for this installation, to first create a user’s facial model in a calibration and then track the face very accurately.All data is collected over a network on one computer, pre-processed in an interface program in Unity(4), and finally used in Max/MSP for synthesis and game logic.

Currently there are three pieces available: Ave Maria (Franz Schubert: Ellen’s Third Song, D. 839, Op. 52, No. 6.), Nessun Dorma (Giacomo Puccini: Turandot, Act 3: Nessun Dorma, Calaf) and La Donna È Mobile (Giuseppe Verdi: Rigoletto, Act 3: La donna est mobile, Duke of Mantua). All three pieces include accompaniment music, a table of the keys occurring in the piece, and the vocal melody. An algorithm helps the user to sing the correct melody as easily as possible. If he deviates with his movements from the melody line, the table of the keys is consulted to produce a melody that fits as good as possible to the piece.

The installation has already been shown at several events and conferences and has always received positive feedback. The optional lighting control can be added to every available song as it is connected to the volume and pitch of the singing voice. Crowd participation is so far only available with the song La Donna È Mobile.

Links

- https://cycling74.com/products/max/

- https://neuronmocap.com/

- https://facegen.com/

- https://en.wikipedia.org/wiki/PrimeSense

Publications

Jochen Feitsch, Marco Strobel, Christian Geiger: CARUSO Singen wie ein Tenor. Mensch & Computer Workshopband 2013: 531-534

Jochen Feitsch, Marco Strobel, Christian Geiger: Caruso: augmenting users with a tenor’s voice. AH 2013: 239-240

Jochen Feitsch, Marco Strobel, Christian Geiger: Singing Like a Tenor without a Real Voice. Advances in Computer Entertainment 2013: 258-269

Jochen Feitsch, Marco Strobel, Stefan Meyer, Christian Geiger: Tangible and body-related interaction techniques for a singing voice synthesis installation. Tangible and Embedded Interaction 2014: 157-164

Cornelius Poepel, Jochen Feitsch, Marco Strobel, Christian Geiger: Design and Evaluation of a Gesture Controlled Singing Voice Installation. NIME 2014: 359-362